TL;DR

- India’s AI cloud and datacenter market is growing rapidly, with demand for AI-ready datacenters scaling across edge, core, and hyperscale cloud nodes.

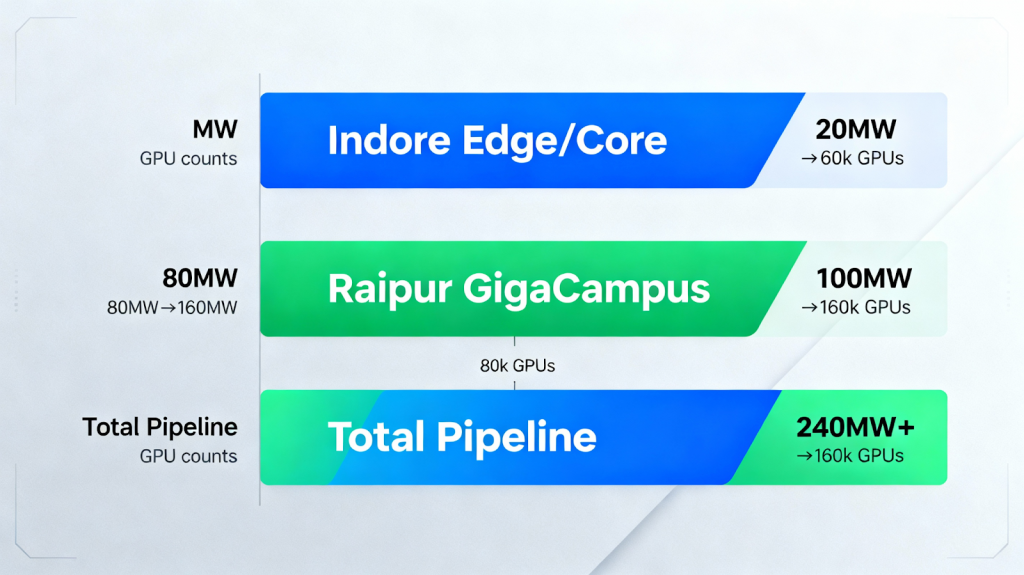

- RackBank leads with scalable datacenters in Raipur and Indore, offering 80MW of AI-optimized capacity that houses 60,000+ GPUs, with flexible rack densities and energy-efficient liquid immersion cooling.

- Our infrastructure strategy connects edge AI compute for real-time inference with core hubs and GigaCampus datacenter ecosystems to meet demanding AI workloads and data sovereignty needs.

- Green energy, innovative cooling, and tier-2 city locations enable cost leadership and sustainability in India’s AI-powered digital economy.

- For CTOs, embracing hybrid architectures spanning edge to GigaCampus will catalyze scalable AI deployments and the future of national AI innovation.

Leading India’s AI Datacenter Evolution

India’s AI infrastructure landscape is evolving rapidly. As CTO of RackBank, I see a critical need to build agile, scalable, and sustainable AI datacenters that can support high-density GPU workloads for both AI model training and real-time inference. India’s AI datacenter market is poised to reach nearly 1.8 GW of capacity by 2027, driven by digital transformation and AI adoption across sectors. RackBank’s strategy addresses this demand by building interconnected AI compute hubs that span edge sites through hyperscale datacenters.

RackBank’s AI infrastructure offerings are anchored by our flagship hyperscale GigaCampus datacenter in Raipur. Its first, 80MW AI-optimized phase can house over 60,000 GPUs at flexible rack densities of 50kW to 150kW per rack. The campus is designed to scale to 160MW in step with NVIDIA’s GPU architecture roadmap, and it runs on clean energy, using RackBank’s patented liquid immersion cooling to deliver up to 70% energy savings.
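The capacity figures above can be sanity-checked with simple arithmetic. The sketch below is a back-of-envelope calculation, not a sizing tool. It assumes the 80MW phase figure is usable IT (rack) power, since the text does not say whether it is IT load or total facility power, and the helper names are hypothetical.

```python
# Back-of-envelope capacity arithmetic for the 80MW first phase.
# Assumption: 80MW is treated as usable IT (rack) power, not total facility power.

def racks_needed(it_power_kw: float, rack_density_kw: float) -> int:
    """Whole racks that fit in an IT power budget at a uniform rack density."""
    return int(it_power_kw // rack_density_kw)

def power_per_gpu_kw(it_power_kw: float, gpu_count: int) -> float:
    """Average IT power per GPU, including each GPU's share of the host
    servers, networking, and storage in the same racks."""
    return it_power_kw / gpu_count

PHASE_1_KW = 80_000    # 80MW first phase
PHASE_1_GPUS = 60_000  # "over 60,000 GPUs"

if __name__ == "__main__":
    for density in (50, 100, 150):  # the 50kW to 150kW per rack range
        print(f"{density:>3} kW/rack -> {racks_needed(PHASE_1_KW, density)} racks")
    print(f"~{power_per_gpu_kw(PHASE_1_KW, PHASE_1_GPUS):.2f} kW per GPU")
```

Under these assumptions the phase works out to roughly 533 racks at 150kW or 1,600 racks at 50kW, and about 1.33kW of IT power per GPU, which shows why rack density drives floor-space planning as much as GPU count does.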

Edge to Core Integration: Foundation for Real-Time AI

Edge AI Infrastructure for Low Latency and Distributed AI

AI workloads at the edge are essential for latency-sensitive applications in smart cities, manufacturing, and financial services. RackBank deploys edge AI infrastructure strategically in tier-2 cities such as Indore and Raipur, enabling enterprises to run real-time AI inference closer to their data sources while integrating seamlessly with core compute hubs.

This distributed architecture relies on datacenter interconnects (DCIs) that bridge low-latency edge nodes with core clusters. The result is a hybrid AI architecture that can scale AI workloads from local inference to large-scale model training without sacrificing performance or data sovereignty.
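As a rough illustration of the edge-to-core split, the sketch below places a workload by its latency budget. It assumes a deliberately simple policy: training always goes to the largest, most central tier, and inference goes to the most central site whose fiber round-trip time plus compute time still fits the budget. The `Site` and `Workload` types, site names, and route lengths are hypothetical. The one physical input is that light in optical fiber travels at roughly 200,000 km/s, or about 0.01 ms of round trip per km of route.

```python
from dataclasses import dataclass

# Light in optical fiber covers roughly 200,000 km/s (about 5 us per km one way),
# so round-trip propagation is about 0.01 ms per km of fiber route.
FIBER_RTT_MS_PER_KM = 0.01

@dataclass
class Site:
    name: str        # hypothetical site label
    route_km: float  # assumed fiber route length from the data source

@dataclass
class Workload:
    kind: str                 # "inference" or "training"
    latency_budget_ms: float  # end-to-end budget for one request
    compute_ms: float         # assumed model execution time at the site

def round_trip_ms(site: Site) -> float:
    """Propagation delay only; ignores queuing, switching, and serialization."""
    return site.route_km * FIBER_RTT_MS_PER_KM

def place(workload: Workload, sites_nearest_first: list[Site]) -> Site:
    """Hypothetical placement policy for a hybrid edge/core/campus layout."""
    if workload.kind == "training":
        return sites_nearest_first[-1]  # largest, most central tier
    # Prefer the most central site that still meets the latency budget.
    for site in reversed(sites_nearest_first):
        if round_trip_ms(site) + workload.compute_ms <= workload.latency_budget_ms:
            return site
    return sites_nearest_first[0]  # nothing fits: fall back to the nearest edge site

if __name__ == "__main__":
    sites = [Site("edge-local", 20), Site("core-hub", 600), Site("gigacampus", 1200)]
    print(place(Workload("inference", 12, 4), sites).name)  # core-hub
    print(place(Workload("inference", 3, 2), sites).name)   # edge-local
    print(place(Workload("training", 1000, 0), sites).name) # gigacampus
```

Preferring the most central site that meets the budget keeps scarce edge capacity free for the tightest workloads. A real scheduler would also weigh site load, cost, and data-residency rules.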

Why Tier-2 Cities?

RackBank’s operational model emphasizes tier-2 cities because of their cost efficiencies, clean power availability, and favorable government incentives. Building in Indore and Raipur cuts operational expenses by a factor of up to 2-3 compared with metro areas while maintaining high performance standards. These local ecosystems strengthen India’s AI-powered digital economy, with the added benefits of regulatory compliance and reduced latency nationwide.

GigaCampus: Hyperscale Datacenter for National AI Needs

A Green, Scalable AI Datacenter Campus

The GigaCampus in Raipur is India’s first purpose-built AI datacenter campus, spanning over 13.5 acres in a Special Economic Zone (SEZ). Designed with future AI infrastructure modernization in mind, it uses RackBank’s in-house liquid immersion cooling and rear-door heat exchanger systems, which keep operations energy-efficient while supporting high-density AI workloads.
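Datacenter energy efficiency is usually expressed as Power Usage Effectiveness (PUE), the ratio of total facility power to IT equipment power. The sketch below shows how cutting cooling power moves PUE. The baseline figures are hypothetical, and reading the 70% savings figure quoted earlier as a 70% reduction in cooling power is an assumption, since the article does not state what the savings are measured against.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw

def pue_with_cooling_cut(it_kw: float, cooling_kw: float,
                         other_overhead_kw: float, cut_fraction: float) -> float:
    """PUE after cooling power is reduced by cut_fraction (0.7 means 70% less).
    Assumption: the saving applies to cooling power only."""
    return pue(it_kw, cooling_kw * (1.0 - cut_fraction), other_overhead_kw)

if __name__ == "__main__":
    # Hypothetical baseline: 1,000 kW IT load, 400 kW cooling, 100 kW other overhead.
    before = pue(1_000, 400, 100)
    after = pue_with_cooling_cut(1_000, 400, 100, 0.7)
    print(f"PUE before: {before:.2f}, after: {after:.2f}")  # PUE before: 1.50, after: 1.22
```

Because PUE divides by IT power, savings on cooling show up directly as a lower ratio, while the IT load itself (the GPUs) is unchanged.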

At full scale, the GigaCampus aims to house over 100,000 GPUs in an AI cluster architecture suited to the most demanding workloads, such as LLM training and large-scale AI compute. The facility gives enterprises flexibility in rack density and can deliver new infrastructure in just nine months, significantly faster than typical metro builds.

Enhancing India’s Sovereign AI Cloud

With data privacy and sovereignty top of mind in India, RackBank’s infrastructure supports sovereign cloud platforms enabling governments and enterprises to securely deploy AI workloads on Indian soil. By combining location advantages with technology leadership, RackBank is setting a foundation for reliable, secure, and efficient AI data pipelines across the country.

FAQs

- What is RackBank’s AI infrastructure strategy?

RackBank focuses on scalable, AI-ready datacenters that connect edge AI infrastructure in tier-2 cities with hyperscale GigaCampus facilities, delivering distributed, low-latency AI compute for India’s growing AI demands.

- How does RackBank link edge, core, and GigaCampus for AI workloads?

Through strategically placed datacenters joined by datacenter interconnects, real-time edge AI inference integrates seamlessly with powerful core compute hubs and with the GigaCampus for large-scale training and hybrid AI architectures.

- Why choose RackBank for AI infrastructure deployment in India?

RackBank offers advanced GPU cloud facilities in India with patented immersion cooling technology, substantial energy savings, and rapid build timelines, located in cost-effective tier-2 cities and backed by government incentives.

- What benefits do India’s tier-2 city datacenters offer for AI workloads?

Lower operational costs, faster project delivery, and reduced latency across the country, while also supporting sovereign cloud requirements and AI infrastructure modernization.

- How is RackBank supporting India’s AI-powered digital economy?

By building the largest green AI datacenter campus and delivering secure, scalable infrastructure that powers national AI initiatives and enterprise adoption.

Vision for India’s AI Future

For India’s AI journey, scalable and agile infrastructure is paramount. RackBank’s strategy of linking optimized edge nodes, robust core hubs, and hyperscale GigaCampus datacenters creates a resilient AI compute fabric that will drive innovation and sovereignty for decades. This hybrid AI architecture not only meets immediate needs for AI workloads at the edge and hyperscale but also positions India at the forefront of the global AI revolution.

As CTO, I urge strategic leaders to embrace these scalable, sustainable infrastructures today to future-proof AI deployments capable of supporting trillion-parameter models and the next wave of AI-driven digital transformation in India.
