For years, India’s AI journey followed a simple rule: push workloads to the cloud, scale compute centrally, and optimize cost later. That model worked when AI was mostly batch processing, analytics, or offline training.

That era is ending.

Many of today’s AI workloads are real-time. Fraud detection that cannot wait. Manufacturing systems that cannot pause. Healthcare inference that must respond instantly. In these scenarios, the bottleneck is no longer GPU availability alone. It is distance, delay, and control.

This is why Edge AI colocation is emerging as a critical layer of India’s AI infrastructure stack.

Instead of asking “Where can I get compute?”, Indian companies are now asking “How fast can my AI respond, and where does my data live?”

Why Edge AI Changes the Infrastructure Equation

Traditional centralized cloud models introduce latency that network tuning alone cannot remove. Even with the best networks, round-trip delay grows as workloads sit farther from the users, machines, or sensors they serve.
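A back-of-the-envelope calculation shows why distance matters. This minimal Python sketch assumes light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum) and counts propagation delay only. The function name and the example path lengths are illustrative, not measured Indian network routes.

```python
# Assumption: light in optical fiber travels at roughly 2/3 of c,
# i.e. about 200,000 km/s, or ~200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(fiber_path_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing, queuing, serialization, and processing time,
    so real round trips are always higher than this figure.
    """
    if fiber_path_km < 0:
        raise ValueError("distance must be non-negative")
    # Out and back: the signal covers the path twice.
    return 2 * fiber_path_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical fiber path lengths; real routes are usually longer
    # than the straight-line distance between two cities.
    for label, km in [("same metro", 50), ("regional", 500), ("cross-country", 2000)]:
        print(f"{label:>14}: {round_trip_propagation_ms(km):5.1f} ms minimum RTT")
```

Because this is a physical floor, faster GPUs cannot remove it; only a shorter path can. Routing, queuing, and processing time are added on top.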

Edge AI flips the model.

AI inference, preprocessing, and sometimes even training move closer to the source of data. This requires a new class of edge AI data centers in India that are purpose-built for latency-sensitive workloads.

Edge AI is not about replacing centralized cloud. It is about complementing it with infrastructure that understands geography, regulation, and response time.

What is driving this shift in India

| Driver | Impact on AI Infrastructure |
| --- | --- |
| Real-time applications | Millisecond-level latency requirements |
| Data localization norms | Need for in-country processing |
| Smart manufacturing growth | AI at the factory, not in a distant region |
| Fintech scale | Fraud detection cannot wait for a distant cloud region |
| Healthcare digitization | Inference near hospitals and labs |

India’s AI growth is now deeply tied to physical proximity.

Edge AI Colocation Explained Simply

Edge AI colocation means hosting your AI infrastructure inside strategically located datacenters closer to users, factories, financial hubs, or enterprise campuses.

Instead of owning edge facilities yourself, you colocate AI-ready racks inside professional datacenters that offer power, cooling, security, and compliance.

This is why AI colocation services in India are seeing rising adoption among startups and large enterprises alike.

What makes colocation different from edge cloud

| Aspect | Edge Cloud | Edge AI Colocation |
| --- | --- | --- |
| Hardware control | Limited | Full control |
| Latency tuning | Shared | Dedicated |
| Compliance | Provider dependent | Enterprise governed |
| GPU choice | Restricted | Custom |
| Cost predictability | Variable | Stable |

For many AI teams, colocation offers the control of on-prem with the reliability of a professional datacenter.

Why Indian Startups Are Choosing Edge AI Colocation

For AI startups, the early bottleneck is rarely innovation. It is infrastructure friction.

Edge colocation removes that friction.

Key benefits for startups

- Low-latency AI inference for user-facing applications

- Freedom to choose GPUs, accelerators, and networking

- More predictable costs than usage-based hyperscale cloud billing, which can spike

- Faster compliance readiness for regulated sectors

This makes colocation for AI startups a practical path to scale without burning cash or compromising performance.

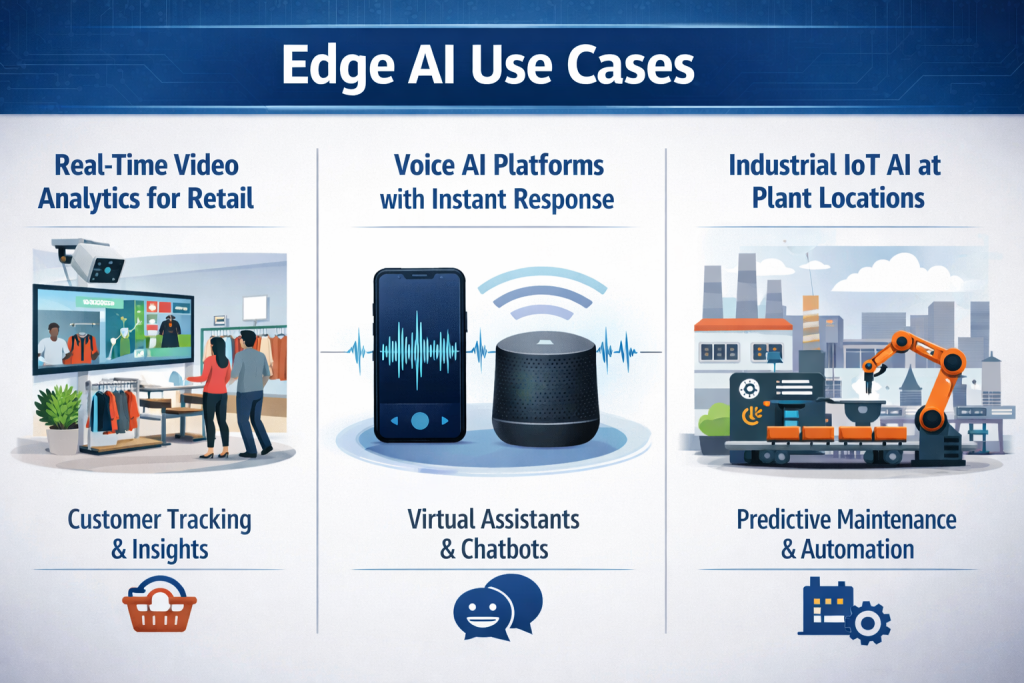

Example use cases

- Real-time video analytics for retail

- Voice AI platforms with instant response needs

- Industrial IoT AI deployed at plant locations

Enterprises Are Re-architecting AI at the Edge

Large enterprises are no longer experimenting with AI. They are operationalizing it.

This changes infrastructure priorities.

Edge datacenters for enterprises enable AI to integrate directly with operations instead of sitting behind distant APIs.

Where enterprises are deploying edge AI today

| Sector | Edge AI Workloads |
| --- | --- |
| Manufacturing | Predictive maintenance, quality checks |
| Fintech | Real-time fraud scoring |
| Healthcare | Diagnostics, imaging inference |
| Logistics | Route optimization, vision AI |
| Energy | Grid monitoring and forecasting |

These workloads demand low-latency AI infrastructure that centralized cloud regions struggle to deliver consistently across India.

Colocation vs Cloud for Edge AI

This is not a cloud versus colocation debate. It is about workload alignment.

When cloud works

- Model training at scale

- Analytics that are not latency-sensitive

- Global distribution use cases

When edge AI colocation works better

- Real-time inference

- Regulation-sensitive data

- Factory floor and branch deployments

- Consistent performance requirements

This is why choosing between colocation and cloud for edge AI is becoming a practical architectural decision, not a philosophical one.

Many mature deployments today use a hybrid model, combining centralized cloud for training and analytics with edge colocation for real-time inference.
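The “when cloud works” and “when edge AI colocation works better” lists can be read as a rough placement rule. The sketch below encodes them in Python. The `Workload` fields, the `suggest_placement` function, and the 50 ms real-time threshold are hypothetical choices for illustration, not an industry standard; a real decision would also weigh cost, capacity, and existing contracts.

```python
from dataclasses import dataclass

# Hypothetical cut-off: end-to-end budgets at or below this count as "real-time".
REAL_TIME_BUDGET_MS = 50.0


@dataclass
class Workload:
    name: str
    latency_budget_ms: float             # end-to-end response target
    regulated_data: bool = False         # data subject to sector or localization rules
    on_site: bool = False                # factory floor or branch deployment
    needs_consistent_perf: bool = False  # cannot tolerate shared-tenant variance


def suggest_placement(w: Workload) -> str:
    """Rule of thumb mirroring the cloud vs. edge colocation lists."""
    edge_signals = (
        w.latency_budget_ms <= REAL_TIME_BUDGET_MS
        or w.regulated_data
        or w.on_site
        or w.needs_consistent_perf
    )
    if edge_signals:
        return "edge colocation"
    # Anything without an edge signal (training at scale, batch analytics,
    # globally distributed services) defaults to the cloud.
    return "cloud"


if __name__ == "__main__":
    examples = [
        Workload("fraud scoring", latency_budget_ms=20, regulated_data=True),
        Workload("nightly sales report", latency_budget_ms=60_000),
        Workload("model training run", latency_budget_ms=float("inf")),
        Workload("line-side defect detection", latency_budget_ms=100, on_site=True),
    ]
    for w in examples:
        print(f"{w.name}: {suggest_placement(w)}")
```

In a hybrid setup, this rule is applied per component rather than per product: the same fraud platform might train in the cloud and score transactions in a colocated rack.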

How Colocation Reduces Latency for AI Workloads

Latency is cumulative. Network hops, processing delays, and shared infrastructure all add up.

Colocation reduces latency by:

- Physically shortening data travel distance

- Removing the contention and overhead of shared, multi-tenant cloud hosts

- Allowing optimized networking stacks

- Enabling workload-specific tuning

This is why “How does colocation reduce latency for AI workloads?” has become a common question among Indian AI teams.
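Because latency is cumulative, it helps to treat it as a budget: add up every stage on the request path and see which one dominates before deciding where to host. The minimal Python sketch below does that. The `latency_budget` function, the stage names, and the millisecond values are placeholders for illustration, not measurements, so substitute figures from your own tracing.

```python
def latency_budget(stages_ms: dict[str, float], target_ms: float) -> tuple[float, str, bool]:
    """Sum per-stage latencies for one request and find the largest stage.

    Returns (total_ms, largest_stage, within_target).
    """
    if not stages_ms:
        raise ValueError("need at least one latency stage")
    total_ms = sum(stages_ms.values())
    # The slowest stage is often a good first target for optimization.
    largest_stage = max(stages_ms, key=stages_ms.get)
    return total_ms, largest_stage, total_ms <= target_ms


if __name__ == "__main__":
    # Placeholder values for illustration only; replace with measured numbers.
    stages = {
        "propagation": 12.0,
        "network hops and queuing": 8.0,
        "shared-host contention": 6.0,
        "model inference": 15.0,
    }
    total, largest, ok = latency_budget(stages, target_ms=30.0)
    print(f"total {total:.1f} ms, largest stage: {largest}, within target: {ok}")
```

In this framing, colocation mainly acts on the network and contention stages, while inference time also depends on the model and the accelerators you choose to install.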

The Role of Edge AI Colocation in India’s AI Economy

India’s AI future will not be built only in large metro cloud regions.

It will be built in:

- Industrial corridors

- Tier 2 and Tier 3 cities

- Manufacturing zones

- Financial hubs

Edge AI deployment in India is becoming a national infrastructure story, not just a technology choice.

As AI moves closer to where decisions happen, edge colocation becomes the backbone that supports it quietly and reliably.

Conclusion: Building AI That Responds, Scales, and Stays Compliant

AI success in India is no longer just about model accuracy or GPU count.

It is about responsiveness, locality, and control.

Edge AI colocation offers a path where startups can scale without friction, and enterprises can deploy AI with confidence. It brings AI closer to users, machines, and decisions while keeping infrastructure predictable and compliant.

For teams evaluating secure Edge AI colocation in India, the conversation should start early, before latency and control become blockers instead of design choices.

If you are building or scaling AI workloads that cannot afford delay, edge-ready colocation infrastructure is no longer optional. It is foundational.

Explore AI-ready colocation environments built for real time, latency-sensitive workloads.

Infrastructure that works where your AI actually operates.